JoergS5 parallel five bar scara

-

@joergs5 said in JoergS5 parallel five bar scara:

how to make stiff arms and hinges without backlash.

My very naive implementation with a preloaded thrust bearing works amazingly well for the elbows. It is definitely not the right way of doing it, and I expect the bearings to break pretty fast.

I got some fancy angular contact bearings on AliExpress, very nice ABEC-5 bearings, just $5 apiece. DexTAR uses two of those in each elbow, preloaded, as they are supposed to be used. But my prototype with those bearings and printed parts does not work very well. PLA is too soft.

You are right to chase backlash! A small backlash or flex is amplified further out.

Don't forget to make the tower the arms are attached to as stiff as possible. My tower is a bit soft, with three 12 mm rods and a single 3060 extrusion to the top. It's hard to find space for the tower if you want good mobility for the arms. RepRap Morgan has an interesting arrangement of the pipes in its tower; triangulation is good.

"Neuartige Drehgelenke". Interesting, bushings and flexible joints. I have to dust off my German and have a closer look...

-

@joergs5 said in JoergS5 parallel five bar scara:

diy an optical encoder to define the exact end stops

Double-sided tape and a piece of bent metal. That's why I need calibration.

-

The calibration is not perfect.

Small configuration errors get amplified when you get close to singularities. The bent line at the top is ~50 mm from where the distal arms are at 180°.
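To put a rough number on that amplification, here is a minimal Python sketch of the forward kinematics of a symmetric five-bar linkage. It is only an illustration: the dimensions (100 mm motor spacing, 100 mm proximal and distal arms), the function names `effector` and `error_mm`, and the choice of a 0.1° angle error as a stand-in for a configuration error are all assumptions, not values from this printer or from the firmware.

```python
import math

def effector(theta1_deg, theta2_deg, base=100.0, l1=100.0, l2=100.0):
    """Forward kinematics of a symmetric five-bar linkage (upper solution).

    All dimensions are hypothetical. Motors sit at (-base/2, 0) and
    (base/2, 0); theta1/theta2 are the proximal arm angles from the +x axis.
    """
    t1, t2 = math.radians(theta1_deg), math.radians(theta2_deg)
    e1 = (-base / 2 + l1 * math.cos(t1), l1 * math.sin(t1))  # left elbow
    e2 = (base / 2 + l1 * math.cos(t2), l1 * math.sin(t2))   # right elbow
    dx, dy = e2[0] - e1[0], e2[1] - e1[1]
    d = math.hypot(dx, dy)  # distance between the elbows
    if d > 2 * l2:
        raise ValueError("elbows too far apart: position unreachable")
    # Height of the effector above the line joining the elbows.
    # h goes to zero when the distal arms are in line (180 degrees).
    h = math.sqrt(l2 ** 2 - (d / 2) ** 2)
    mx, my = (e1[0] + e2[0]) / 2, (e1[1] + e2[1]) / 2
    return (mx - dy / d * h, my + dx / d * h)

def error_mm(theta1_deg, theta2_deg, angle_error_deg=0.1):
    """Effector displacement caused by a small error on the left arm angle."""
    x0, y0 = effector(theta1_deg, theta2_deg)
    x1, y1 = effector(theta1_deg + angle_error_deg, theta2_deg)
    return math.hypot(x1 - x0, y1 - y0)

far = error_mm(100.0, 80.0)   # distal arms well away from 180 degrees
near = error_mm(119.0, 61.0)  # distal arms almost in line
print(f"far from singularity:  {far:.3f} mm")
print(f"near the singularity:  {near:.3f} mm")
```

With these assumed dimensions the same 0.1° error moves the head several times further in the near-singular pose than in the pose far from it, and the ratio keeps growing as the distal arms approach 180°.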

I need a bigger bed

-

@bondus The optical encoder I'm working on is:

I will use an ESP32-CAM for every stepper and let it communicate with the Duet through I2C (ESP32 in slave mode). The image shows a printout on transparent film. It is mounted on the big round geared part. The camera knows the position: a lot of black means a high angle, less black means approaching the endstop position (left and right in different colors, not shown here). At the endstop I will try to find a fine-tuned pattern. The ESP32-CAM camera is an OV2640 with 2.2 micrometer pixel size, so exact enough. This construction has the benefit of knowing where the stepper is and approaching the endstops from both sides.

-

@JoergS5, the stuff I pushed to my repo yesterday does not work properly for v3. The obvious changes were easy to do but there are some issues with homing.

I home both arms at the same time using:

G91 ; relative positioning

G1 S1 X300 Y300 F900 ; move quickly to endstop and stop there (first pass)

G1 S2 X-4 Y-4 F900 ; go back a few mm

G1 S1 X300 Y300 F90 ; move slowly to endstop once more (second pass)

G90 ; absolute positioning

But now it stops when one endstop is hit and leaves the other axis not homed; it did not do that before. That behaviour should be controlled by QueryTerminateHomingMove(). I'll dig around and solve it...

By homing both arms at the same time you avoid ending up in impossible positions, as with linear deltas.

-

@bondus Thank you for the warning and details. I will home both arms at the same time to avoid coming into a singularity area. I'll show you the implementation.

-

@JoergS5, I tested how the angle limitations in the firmware work in reality: the arms happily move into all kinds of disallowed angles. It does not work.

It does not totally bug out and send the steppers to impossible positions as it used to, but it totally ignores the limits.

It looks like RRF relies on LimitPosition(), which is not properly implemented. IsReachable(), which is properly implemented, is not used much.

LimitPosition() is easy to implement if the config option Z is used. Could that not use the normal M208 configuration?

But if that is not in use, we have to rely on the angle limitations: from a given coordinate, compute a new coordinate that is within the angle limits. This seems to be quite complicated, and each work mode is different. It's doable though.

We could skip implementing the complicated LimitPosition() if we use a square or circular limit area and rely on the user having set a reasonable area. The lazy solution.
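As a rough illustration of that lazy approach, clamping a requested coordinate to a rectangle or a circle takes only a few lines. This is a Python sketch of the geometry only, not RRF code: the function names and parameters are hypothetical, and the real LimitPosition() in the firmware has a different signature.

```python
import math

def clamp_to_rectangle(x, y, x_min, x_max, y_min, y_max):
    """Move (x, y) to the nearest point inside an axis-aligned rectangle."""
    return (min(max(x, x_min), x_max), min(max(y, y_min), y_max))

def clamp_to_circle(x, y, cx, cy, radius):
    """Move (x, y) to the nearest point inside a circle centred at (cx, cy)."""
    dx, dy = x - cx, y - cy
    dist = math.hypot(dx, dy)
    if dist <= radius:
        return (x, y)  # already inside, nothing to do
    scale = radius / dist  # pull the point back along the line to the centre
    return (cx + dx * scale, cy + dy * scale)

# A target outside a hypothetical 300 x 200 mm area is pulled back to its edge.
print(clamp_to_rectangle(350.0, -20.0, 0.0, 300.0, 0.0, 200.0))
# A target 500 mm from the centre is pulled back onto a 100 mm circle.
print(clamp_to_circle(300.0, 400.0, 0.0, 0.0, 100.0))
```

Note that clamping like this only limits the target of a move. It does not by itself address the homing case, where the move has to start outside the limited area.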

I have a homing position that is at the extreme angles of both arms; the position the head has at those angles will almost certainly be outside a reasonable print area (it likes to fight with my desk lamp). For that to work, it must be possible to move from outside any limits defined by coordinates to inside the limited area.

Your optical encoder would help, since you can have a homing position at a reasonable coordinate. A simplified version that just tells whether it's left of, right of, or on the homing position would also make homing easier.

-

@bondus I propose we search for the reason. I must confess I did not fully understand the limit methods; I copied them from the Scara code. I hope you can live with the errors by limiting your print area. I will try to find the reason later, after I finish the build.

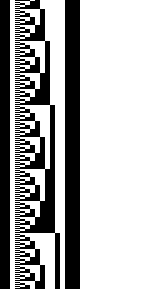

The encoder will be built with three ESP32-CAMs (I will also use it for the Z endstop) and one STM32F7 board which communicates with the Duet. I will encode the encoder printout with a binary pattern, such that + and - degrees can be read directly. Something like the attached image, but curved around the centre point.

The black bars left and right mark the ends, in the middle the first bit is + or -, the other bits calculate to the degree.

-

@JoergS5, a binary encoded position encoder.

I wonder how you will make use of that with the Duet/RRF? What signals do you plan to send to the board? Conditional gcode would make it useful in a homing script.

-

@bondus Duet shall send an M260 over I2C to the ESP32 or STM32 board; the external board will move the steppers to the endstops, then send ok back when finished. I am not sure how the external board will move the steppers (could be some crude rr_ commands, but it should be easier).

-

@bondus I will try a SMuFF-style serial connection (https://github.com/technik-gegg/SMuFF-Ifc) to send and receive data.

The optical encoder is coming along. If I calculated correctly, 1/10 degree or a bit better is achievable (encoding with Gray code: https://en.wikipedia.org/wiki/Gray_code).

-

In case someone wants to follow my optical encoder procedure: I've created a GitHub repository here: https://github.com/JoergS5/OpticalEncoder with a first Gray code PNG for printout and the Java program to create it.
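For readers who have not met Gray code before, here is a minimal Python sketch of the idea. It is not the Java program from the repository, whose details are not shown in this thread, and the function names are made up for illustration. The key property is that neighbouring positions differ in exactly one bit, so a camera reading the pattern on the boundary between two positions can only be off by one step.

```python
def to_gray(n):
    """Convert a non-negative integer to its reflected binary Gray code."""
    return n ^ (n >> 1)

def from_gray(g):
    """Convert a Gray code value back to the plain integer."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n

def bits_for_resolution(resolution_deg, span_deg=360.0):
    """Number of bits (tracks) needed to resolve span_deg in resolution_deg steps."""
    positions = round(span_deg / resolution_deg)
    return max(1, (positions - 1).bit_length())

# First few codes: each differs from the previous one in a single bit.
for i in range(8):
    print(i, format(to_gray(i), "03b"))

# Tracks needed for 1/10 degree over a full turn (assuming a full 360 degree pattern).
print(bits_for_resolution(0.1))
```

A full turn at 1/10 degree is 3600 positions, which needs 12 tracks. An arm that only sweeps part of a turn would need fewer for the same resolution.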

-

@JoergS5, you are remaking a classic old rotational encoder with gray coding.

With a powerful CPU and a high-resolution linear sensor it must be possible to create a better pattern. Using a clever pattern, some image processing, error correction, Viterbi, etc., it must be possible to get far better resolution. The mechanical industry has a lot of old tech that needs to be renewed.

To be useful in our case, a 1/10 deg resolution of the arms is not good enough for positioning.

-

@joergs5. Do you have any datasheets on those linear sensors? It's quite an interesting problem.

-

@bondus For precision, "I have an ace in my sleeve" (I hope DeepL translated this correctly).

The encoder is meant to find the position when the printer starts working: where am I roughly, and in which direction do I have to turn the steppers. For fine positioning, I will use a finer structure like opened old chips and a pattern recognition. That's the reason why I want to use STM32, for the needed processing power.

I wanted to use the ATM212 encoder which I bought, but it loses the absolute position information when powered off, and it is not exact and depends on the speed of the movements.

I will use simple cameras as linear sensors, like the OV2640, and if this is not sufficient, I'll try the OV5640. Line sensors were used in the first scanners, but I cannot find them to buy anymore.

-

@JoergS5, using a camera to read the patterns must limit the sampling frequency quite a lot; camera frame rates are normally not very high. But on the other hand you can potentially get very high resolution, since there are a lot of pixels of input.

I worked with early CMOS sensors back in the '00s, even the '90s. You could do a lot of tricks, such as just reading a few lines to get much higher "frame" rates. But things must have changed a lot since then.

-

@bondus My first tests with the ESP32-CAM were unsuccessful; I will need more research. If you have hints on how I can improve the encoder, please tell me!

My current approach is:

- Make the printout as big as possible; the resolution is better then. A 30 cm diameter gives, at 600 dpi, a maximum of 22231 dots around the circumference, so one dot is about 1/60 degree. The dots could be subsampled by the camera. I thought about using a neural network solution.

- Buy ArduCam OV2640 and OV5647 modules, where the lens can be removed to get macro focus. For the OV5647 I will need a Raspberry Pi Zero W each. There is a breakout version of the OV2640.

If the resolution is too low, I will wait for the new OV48B sensor! (A joke: the main problem will not be the resolution, but the focus.)
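The resolution estimate in the first bullet above can be checked with a little arithmetic. The sketch below assumes the dots are counted along the full circumference of a 30 cm disc and that the printer really places 600 distinct dots per inch; the function name is made up for illustration.

```python
import math

def dots_on_circumference(diameter_mm, dpi):
    """Printable dots along the edge of a disc of the given diameter."""
    circumference_in = math.pi * diameter_mm / 25.4  # mm to inches
    return circumference_in * dpi

dots = dots_on_circumference(300.0, 600)
dots_per_degree = dots / 360.0
print(f"{dots:.0f} dots, {dots_per_degree:.1f} dots per degree")
```

This lands within a fraction of a percent of the 22231 dots quoted, and confirms roughly 60 dots per degree. The small difference may come from rounding or a slightly smaller usable diameter, which is a guess.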