Where is the difference - 10 times X1 vs 1 times X10

-

I did the same with the control system on the MCP/MTT/Renishaw machine. The computer read the whole build file and parsed it into exposure points a controlled distance apart. These were then sent to the optics system as single slice files. Yeah, some were vastly larger than the source data, but it also cleaned up small vector issues which the real-time controllers really struggled with.
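To illustrate why pre-parsing vectors into exposure points can make the slice file much larger than the source, here is a minimal Python sketch. It assumes points are spread evenly along each vector with a gap no larger than a chosen spacing; the post does not say how the real machine distributed them, and `exposure_points` is a made-up name.

```python
import math

def exposure_points(start, end, spacing):
    """Split one scan vector into evenly spaced points (assumed scheme).

    The gap between neighbouring points never exceeds `spacing`.
    """
    dx, dy = end[0] - start[0], end[1] - start[1]
    length = math.hypot(dx, dy)
    # Number of intervals; at least one so a zero-length vector still yields its endpoints.
    n = max(1, math.ceil(length / spacing))
    return [(start[0] + dx * i / n, start[1] + dy * i / n) for i in range(n + 1)]

# One 1 mm vector (two coordinates in the source) becomes five exposure points.
points = exposure_points((0.0, 0.0), (1.0, 0.0), 0.25)
```

A single long vector therefore expands into many rows, which is where the size increase comes from.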

But this did make all sorts of things very easy, such as part suppression, moving and offsetting parts or slice data, changing processing parameters, and reloading build files mid-print for more serious changes.

-

@droftarts said in Where is the difference - 10 times X1 vs 1 times X10:

Some 3DSystems printers do (or, at least, did) that, too. Leveraged the host CPU for the complex stuff, sent individual step commands in what was basically a big spreadsheet to a dumb microcontroller on board. Then the board only has to run the 'spreadsheet', and monitor temperatures and any other inputs.

that's the dudes that purchased bitsfrombytes? the first 32bit (pic32mx based) electronics in reprap/repstrap world

When I first came in contact with TT machines (UP Plus 2) I did some research, as in some things they were ages ahead of the reprap community. What I found is that they used the same approach on these small "home" printers as professional 500+k machines use: most of those huge machines do exactly that, just execute the "spreadsheet" as you call it, with everything done in the "slicer". On the other hand, the UP Plus 2 uses an NXP 32-bit ARM to execute that spreadsheet, while most home 3D printers try to do everything from parsing to planning to executing with an 8-bit ATmega.
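As a rough picture of what "executing the spreadsheet" could look like, here is a minimal Python sketch. The row layout (`delay_us`, `axis`, `direction`) is an assumption made for illustration, not the format any of these machines actually uses.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class StepRow:
    delay_us: int    # time to wait since the previous row, in microseconds
    axis: str        # which motor to pulse
    direction: int   # +1 or -1

def run_table(rows):
    """Replay a precomputed step table.

    Returns the final position of each axis (in steps) and the total elapsed
    time. A real board would pulse a pin where this sketch just counts.
    """
    position = {}
    elapsed_us = 0
    for row in rows:
        elapsed_us += row.delay_us
        position[row.axis] = position.get(row.axis, 0) + row.direction
    return position, elapsed_us

table = [
    StepRow(100, "X", 1),
    StepRow(100, "X", 1),
    StepRow(50, "Y", -1),
    StepRow(150, "X", 1),
]
position, elapsed_us = run_table(table)
```

The loop contains no parsing, planning or floating-point maths, which is the point: all of that was done up front on the host.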

-

@arhi It makes sense when you've got a dedicated PC connected to the printer (like in the CNC world), but less sense when you've got a general purpose PC doing it. Because if you're doing all the computation up front and creating a huge file, you've either got to stream the data to the microcontroller (so the PC shouldn't really be used for other things to avoid hiccups, which ties up a potentially expensive PC), or your microcontroller gets more complicated as you have to add things like storage and ways of listing what's on the storage. Or you get a second PC to handle the data streaming. Or you get a smarter microcontroller. All options have their merits and deficiencies!

Ian

-

@droftarts haven't we essentially got what we need already with the Duet 3 and single board computer? The Raspberry pi is likely more powerful than the Windows XP 32 bit system I was using.

This would be easy enough to write in a way that the cleaned gcode could be recompiled back to a complete gcode file, modifications and all. This would mean the software could separately pre-parse data offline, making it useful to all generations of Duet, and to Duet 3 without single board computers. ...also appeasing those who get twitchy at not seeing all the gcode prior to a run.

-

@droftarts well on those old machines without dedicated computer you were dead in the water but today 128G SD card can be run from 8bit mcu

.. now, no clue what power is required to step through the "spreadsheet" but if 8bit on 16MHz can parse the g-code, calculate the plan, and then step through it I'm kinda sure it can step through the precompiled plan

What I really didn't like about UP was that all the printer calibration (size, skew, bed mesh...) is in the slicer, so your code is not universal. You have to slice for each specific machine. I, for example, have both an UP Plus 2 and an UP Mini, with much the same bed. If I put the same size nozzle in, the g-code is generally identical when they are running smoothieware (as they are now, but the Mini will be going to Duet these days), but if they are running the original firmware I have to slice specifically for each printer. It has its benefits too: there's no fiddling with firmware configuration, everything is point and click (not sure if I could adjust some of the calibration things TT allows with Duet) .. anyhow we went faaaaaaaaaar away from the original post ... the big take from this thread is the length of the queue

-

@arhi said in Where is the difference - 10 times X1 vs 1 times X10:

that's the dudes that purchased bitsfrombytes?

Yes. Bits from Bytes were based in Clevedon, not far from Bristol (where I am), and grew out of the very early RepRap community at Bath University (Dr Adrian Bowyer et al). I know a couple of people that worked there, including once 3DSystems took them over and effectively mothballed production, keeping it for R&D (though dictated by the US head office) and supporting existing machines in Europe.

Ian

-

@DocTrucker said in Where is the difference - 10 times X1 vs 1 times X10:

@droftarts haven't we essentially got what we need already with the Duet 3 and single board computer? The Raspberry pi is likely more powerful than the Windows XP 32 bit system I was using.

Only if you dedicate the Pi exclusively to running the print and don't try to do anything else that is CPU-intensive on it. Raspbian is not a real-time operating system. RRF/DSF run the planning on the Duet (which does run a real-time operating system) so that you can do other things on the Pi at the same time, e.g. camera, complex web interface (GCode visualisation coming soon), and potentially slicing. That's also why we use a dedicated SPI interface instead of USB.

-

@DocTrucker it is what klipper is doing, precompiling the g-code in real-time and pushing the stepper instructions to the stepper boards... so all planning is done on the host (that can be an RPi but also a 128-core desktop PC with a terabyte of RAM)

-

@arhi said in Where is the difference - 10 times X1 vs 1 times X10:

@DocTrucker it is what klipper is doing, precompiling the g-code in real-time and pushing the stepper instructions to the stepper boards... so all planning is done on the host (that can be an RPi but also a 128-core desktop PC with a terabyte of RAM)

Running a real-time task such as planning on a system running a non-realtime OS and sending near real-time commands over a shared bus is IMO a dubious thing to do. But of course you can get away with it if you don't have much else competing for CPU and bus time.

-

@droftarts that was a sad day .. I used rapman 3.0 and making rapman reliably print HDPE and PP got me into reprap core team 10 years ago

.. those were the times .. hand made hotends, revolutionary wade's extruder... ah.. memories nope's idea for heated bed ... there was a very strong community around bfb, prusa's first printer was rapman, erik that made ultimaker, first printer was rapman too .. kai parthy the guy who invented wood filled filament and all those lay* filaments was also there ... memories ...

-

@dc42 said in Where is the difference - 10 times X1 vs 1 times X10:

Running a real-time task such as planning

I have not tried klipper yet so can't comment really but ppl are pretty happy with it so it kinda works. Not sure how exactly.

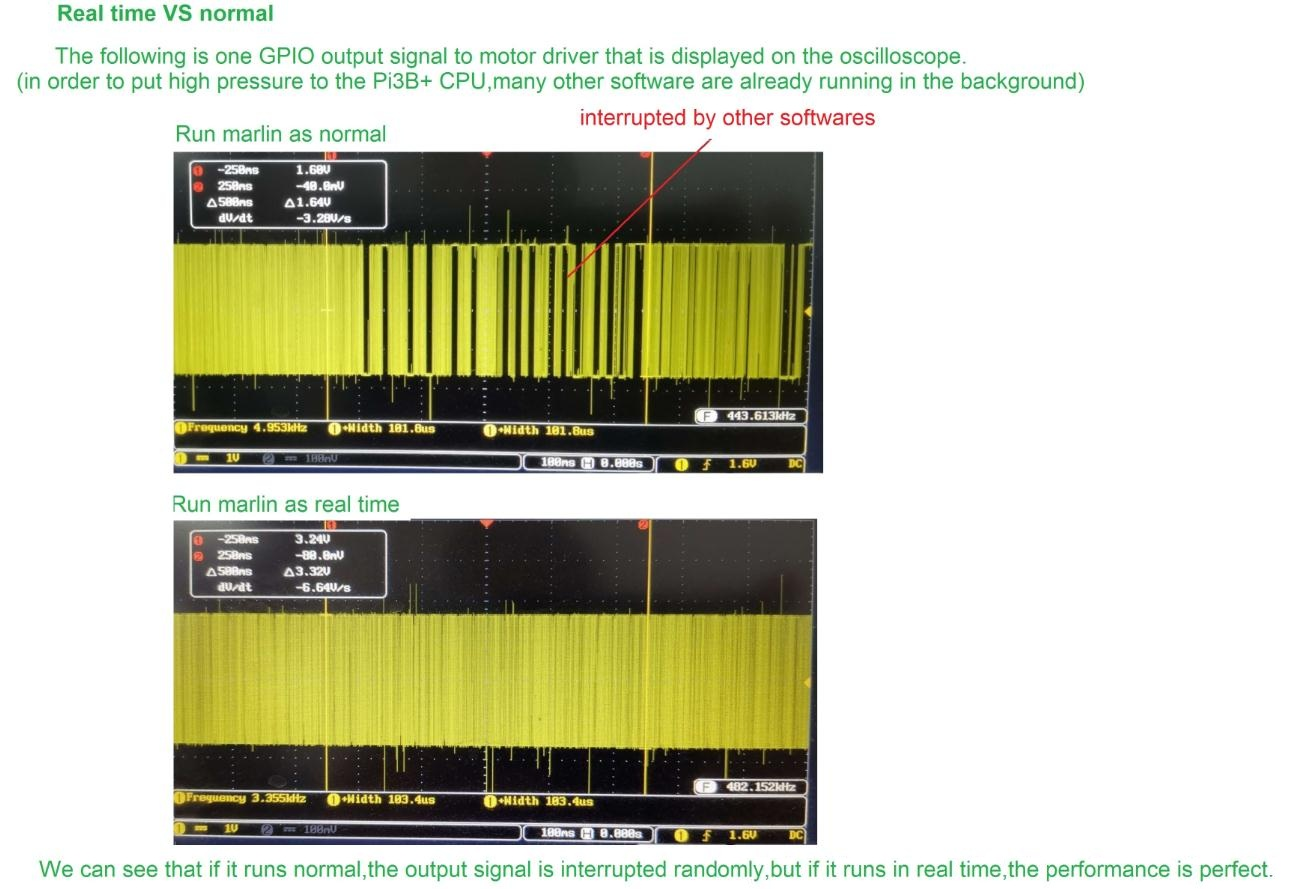

What's more interesting is that there is a firmware (forgot the name) that runs completely on the RPi!! They do use a real-time kernel, but they step the drivers from GPIO pins on the RPi itself!!! I was very skeptical that this could work, but I've seen the tests and ... I still have a hard time believing it

-

@ChrisP, I would disagree on this being a bug. The controller's job is to do what it's told. If you call for 100x1 it should treat it as 100 moves and include acceleration/deceleration as such. If you want an optimiser, that should be a separate processing path, be it in the slicer or in an operation between the slicer and execution of the print.
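To put rough numbers on that, here is a minimal Python sketch comparing one 100 mm move with 100 separate 1 mm moves. It assumes the worst case where every segment starts and ends at rest with a simple trapezoidal speed profile; firmware with look-ahead normally carries speed through collinear junctions, so treat this as an upper bound. The speed and acceleration values are arbitrary examples.

```python
import math

def move_time(distance, v_max, accel):
    """Time for a rest-to-rest move with a trapezoidal (or triangular) profile."""
    # Distance used by accelerating to v_max and braking back to zero.
    ramp_distance = v_max ** 2 / accel
    if distance >= ramp_distance:
        # Trapezoid: ramps plus a cruise section at v_max.
        return distance / v_max + v_max / accel
    # Triangle: the move is too short to ever reach v_max.
    return 2 * math.sqrt(distance / accel)

V_MAX = 100.0    # mm/s, example value
ACCEL = 1000.0   # mm/s^2, example value

one_long = move_time(100.0, V_MAX, ACCEL)          # 1 x 100 mm
many_short = 100 * move_time(1.0, V_MAX, ACCEL)    # 100 x 1 mm, stopping each time
```

With these example values the single move takes about 1.1 s and the hundred stop-start moves about 6.3 s, which is the size of gap the thread title is asking about.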

Adding that complexity in the controller increases the likelihood of defects in the control loop, and goes against what is expected of a computer processor... to do its job as it's told.

-

@arhi said in Where is the difference - 10 times X1 vs 1 times X10:

What's more interesting is that there is a firmware (forgot the name) that runs completely on the RPi!! They do use a real-time kernel, but they step the drivers from GPIO pins on the RPi itself!!! I was very skeptical that this could work, but I've seen the tests and ... I still have a hard time believing it

If it's using a real-time kernel on the Pi then that seems entirely reasonable to me, if the Pi has enough I/O pins.

Another option would be to use a quad-core Pi with a real-time kernel running on one core and Linux on the other 3.

-

Before switching to Duet out of curiosity I ran LinuxCNC on a Pi using a PREEMPT_RT patched kernel with ST L6470 stepper drives connected over SPI. Analog I/O was handled by an STM32F103 'blue pill' board over USB/HID (which provides an upper bound on latency also)

PREEMPT_RT takes care of the real-time requirements and the system ran flawlessly. My switch to Duet was more out of curiosity than necessity. Maybe PREEMPT_RT is something to consider for DSF? It will soon be in the mainline kernel, although they have been saying that for 10 years or so.

-

Regards the Raspberry Pi commanding direct to steppers I believe they used one of the memory interfaces.

@dc42 the parsing is not a real-time task. So long as a slice can be prepped at least as quickly as the machine processes one, you don't have an issue.

-

@DocTrucker Running a second, intermediate process to 'clean up the gcode' means, at least at some level, altering the gcode. So people have to get comfortable with that, and with the hassle of running a second process on their files.

I believe Klipper effectively does all the step generation on the RPi, and uses connected controllers as 'dumb' microcontrollers, feeding them step pulses, though I confess I've barely looked at Klipper. Maybe a way to do this on Duet would be to run the RRF in a virtual machine (so you get to use the printer's config.g), run a simulation of the gcode file (built in to RRF), but record the output to a file, somehow adjust for speed/timing, then have a function in RRF on the board that can 'read' a raw step file. Maybe this could be done through RPi/DSF?

Circling back to the original topic (sort of), what really needs to happen is for Slicers to allow import of CAD files, eg .OBJ, and export G2 and G3 arcs, and G5 cubic bezier curves. Then we get rid of stl approximations, and gcode that has any curves generates a much more compact file, and reduces the problem of buffer or cache underruns. It seems generating steps from G5 (at least, not sure about G2/3 but think they use sin/cos) doesn't come with too much of a computational overhead for the microcontroller (see this from 2016 https://github.com/MarlinFirmware/Marlin/issues/3022 ). So perhaps then it's not necessary to either generate step code on the PC, or have very powerful microcontrollers, once slicers get smarter!
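To give a feel for how much more compact an arc command is than the line segments that approximate it, here is a minimal Python sketch. It counts the straight segments needed for a full circle so that no chord deviates from the true arc by more than a tolerance, using the sagitta relation s = r(1 - cos(φ/2)). The radius and tolerance are example values, and `segments_for_circle` is a made-up helper, not something taken from a slicer.

```python
import math

def segments_for_circle(radius, tolerance):
    """Minimum number of equal chords approximating a full circle within `tolerance`."""
    if not 0 < tolerance < radius:
        raise ValueError("tolerance must be between 0 and radius")
    # Largest angle a single chord may span before its midpoint is
    # further than `tolerance` from the arc.
    max_angle = 2 * math.acos(1 - tolerance / radius)
    return math.ceil(2 * math.pi / max_angle)

# A 10 mm radius circle held to 0.01 mm needs dozens of G1 moves,
# where a G2/G3 description needs only a command or two.
segments = segments_for_circle(10.0, 0.01)
```

Tightening the tolerance or increasing the radius raises the segment count, which is where the long runs of very short moves come from.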

Ian

-

@DocTrucker said in Where is the difference - 10 times X1 vs 1 times X10:

Regards the Raspberry Pi commanding direct to steppers I believe they used one of the memory interfaces.

@dc42 the parsing is not a real-time task. So long as a slice can be prepped at least as quickly as the machine processes one, you don't have an issue.

It certainly is a real-time task when the GCode file has a large number of short segments in a row. Planning is even more so.

There is a big difference between what you can get away with under most conditions, and what you can get away with under difficult conditions.

-

@droftarts I was implying the intermediate software be written in such a way that it runs within DSF or externally on whole files.

So far as people getting nervous about gcode manipulation goes, there are masses of commands that already manipulate the gcode before it is printed. The function being discussed here (clean up consecutive parallel vectors, remove unprocessable data etc) to resolve the OP's problem is little different.

-

I think we are being very weak in terminology here. An extension on the arguments used to call parsing a real time exercise could be used to argue finance software needs to be real time because accounts need to be filed at a specific date.

My understanding of real time is that something needs to be done at a specific time, within a tight tolerance, not early or late. Much like the exact timings of the step pulses to the drivers. If things fall out of sequence there, at best things get noisy; at worst, for example, the timing glitches set up resonances that stall the steppers. Parsing is time critical in the build, but it doesn't matter if the parse executes far quicker than real time (hence it could parse a gcode and output gcode rather than data direct to path planners), so long as there is always enough parsed data to keep the path planner for the steppers full. Much like keeping the buffer full on a CD burn.
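The CD-burn analogy can be shown with a minimal Python sketch: a parser that works in bursts feeds a queue, and a consumer drains it at a fixed rate. Everything here (the tick model, the burst pattern, the `simulate` name) is an assumption for illustration and not how RRF or DSF actually schedule work.

```python
from collections import deque

def simulate(parse_pattern, consume_per_tick, capacity, ticks):
    """Count how often the consumer finds the queue empty (an underrun)."""
    queue = deque()
    underruns = 0
    for tick in range(ticks):
        # Parser: not real time, delivers moves in bursts. A full queue makes
        # it wait, modelled here as lost parse capacity for this tick.
        for _ in range(parse_pattern[tick % len(parse_pattern)]):
            if len(queue) < capacity:
                queue.append(tick)
        # Stepper side: real time, must take a move every tick.
        for _ in range(consume_per_tick):
            if queue:
                queue.popleft()
            else:
                underruns += 1
    return underruns

bursty = [4, 0, 0, 0]   # averages one move per tick, but delivered unevenly
deep_queue = simulate(bursty, consume_per_tick=1, capacity=8, ticks=40)
shallow_queue = simulate(bursty, consume_per_tick=1, capacity=2, ticks=40)
```

The parser's average rate is the same in both runs; only the queue depth differs, and the shallow queue is the one that starves.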

What I'm suggesting is a clean-up parse of the gcode to sort out issues like the consecutive parallel vectors, rather than have the real-time processes bogged down by them, which is what is happening here.

Create a cube in CAD, mesh it, slice it, and the majority of systems will give you eight vectors per slice rather than four. Add rounds to one edge on the top surface and two of the sides are likely to have a far higher quantity of vectors.

This is trash data that the processor has to work through and limits the effectiveness of look-ahead path planning. Very easy to parse out.
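As a sketch of how simple that parse can be, here is a minimal Python example that drops intermediate vertices lying on a straight line between their neighbours. It works on bare XY points only; a real gcode cleaner would also have to check that feed rate and extrusion per mm match before merging moves, and the tolerance here is an absolute value that would need scaling to the units in use.

```python
def merge_collinear(points, tol=1e-9):
    """Remove vertices that split one straight vector into several."""
    if len(points) < 3:
        return list(points)
    kept = [points[0]]
    for i in range(1, len(points) - 1):
        prev, cur, nxt = kept[-1], points[i], points[i + 1]
        ax, ay = cur[0] - prev[0], cur[1] - prev[1]
        bx, by = nxt[0] - cur[0], nxt[1] - cur[1]
        cross = ax * by - ay * bx   # zero when the two vectors are parallel
        dot = ax * bx + ay * by     # positive when they point the same way
        if abs(cross) > tol or dot <= 0:
            kept.append(cur)        # a genuine corner or reversal: keep it
    kept.append(points[-1])
    return kept

# A square sliced into eight vectors (each side split at its midpoint).
square = [(0, 0), (5, 0), (10, 0), (10, 5), (10, 10),
          (5, 10), (0, 10), (0, 5), (0, 0)]
cleaned = merge_collinear(square)
```

The eight-vector square comes back as the four vectors you would expect, leaving the look-ahead planner fewer and longer moves to work with.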

Yeah, other data formats have been mooted, but they tend to require many special cases in the slicing to cope with specific geometric features. The beauty of slicing mesh data is that it's far closer to one size fits all. Forcing the printer to follow that path exactly is a failure to grasp that the mesh data itself is an approximation too. An ideal would be retention of the information used to create the CAD, so path planners know how much they can deviate before they are introducing more error than the mesh generation.