Timelapse pictures & videos with Duet and webcam on layer change

-

@resam So I'm a noob with Python and Raspberry Pis, but I think I mostly understand the GitHub repo and what I need to do. You said you ditched mjpg-streamer and use OpenCV instead, but I can't find how to set up OpenCV to host the webcam image on a URL. Can I still use mjpg-streamer (it seems easier for a noob to set up)?

-

@assasinscreed00 I don't remember saying I ditched mjpg-streamer for OpenCV - I still use mjpg-streamer, just like almost every tutorial on the internet.

-

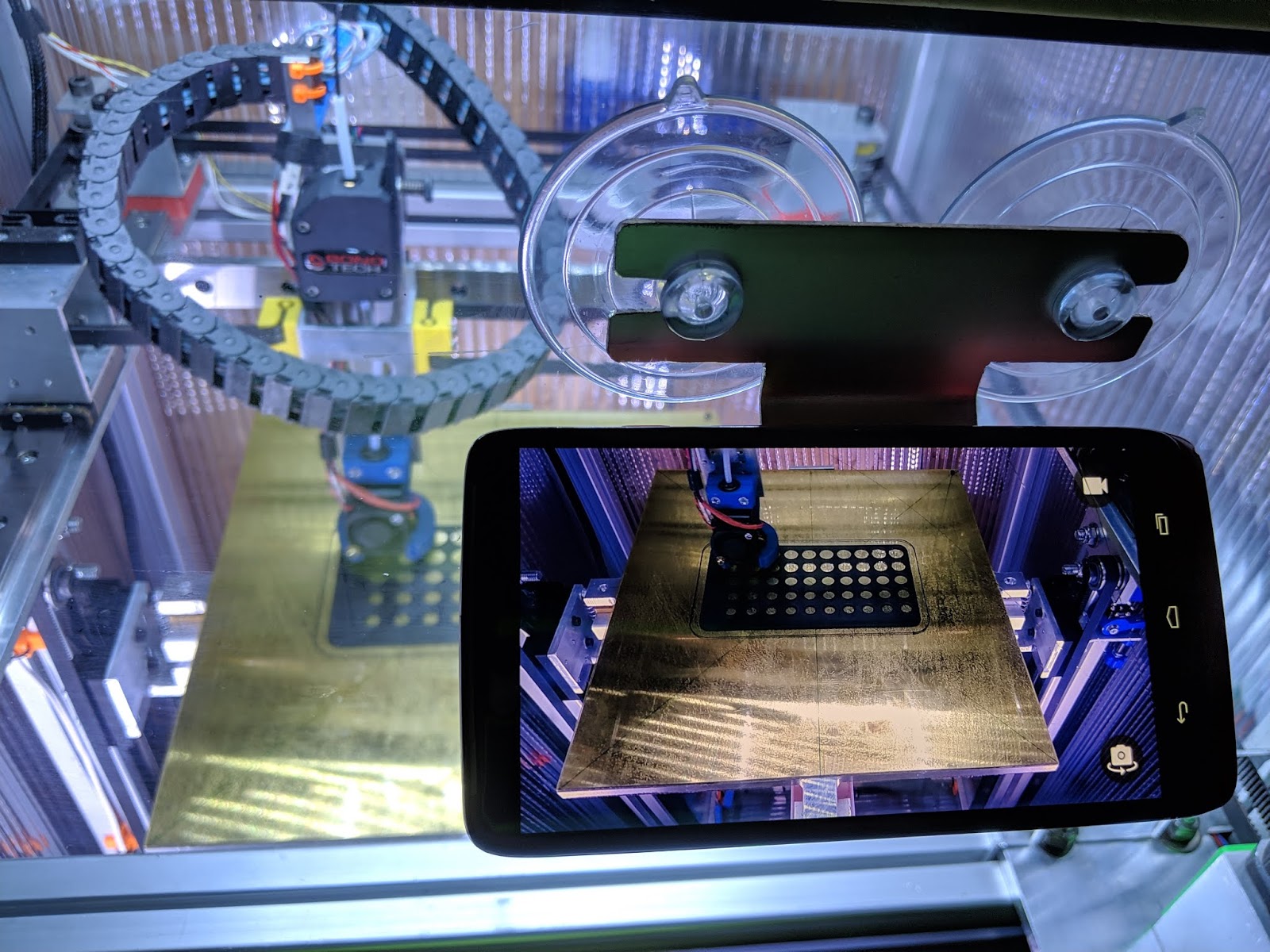

I took a different approach. I had to replace my Droid Turbo phone because it was a few years old and I had cracked the screen and some critical apps would no longer work right, so I put it to use monitoring my printer.

My printer has clear PC front doors so I made a simple suction cup mounted holder for the phone that sticks to the door and provides a good view of the bed plate. I loaded the phone with an app called Open Camera that includes the ability to program it to automatically snap images at whatever interval you desire. I then set up Google Photos to back up the images snapped by the camera. The backup happens as soon as each image is snapped.

I power the phone with a USB wall wart and usually program it to take pictures at 30 second intervals. The phone camera can produce 24 MP images, so much better resolution than you can ever get from a RPi camera. Open Camera optionally labels each image with the time and date it was made. If I'm running a long print I can check status by opening Google Photos and viewing the most recently uploaded image.

When the print is done, if I want to make a video, I batch scale the images to whatever resolution I want in the video using IrfanView and then convert the image sequence to an .avi file using ImageJ. All the software is free, with no advertising and no subscription fees, and all of it works reliably.

Sample image:

More here: https://drmrehorst.blogspot.com/2019/08/putting-old-cell-phone-to-good-use.html

The camera doesn't sync with layer changes, and I can't use it to kill the printer if a print fails, but that's a pretty rare event.

I set it up to make a timelapse video of my corexy sand table recently.

-

@resam said in Timelapse pictures & videos with Duet and webcam on layer change:

@T3P3Tony probably - but the idea behind using OpenCV and ditching mjpg-streamer was to REDUCE complexity

For people who want to keep their DWC webcam, you should probably still use mjpg-streamer (unless somebody knows of a similar / better tool?)

This comment is where I thought you said it. I assume I am reading it wrong?

-

Is there anything in principle preventing this from working on the older Duets, like the 0.6?

-

@aniron said in Timelapse pictures & videos with Duet and webcam on layer change:

Is there anything in principle preventing this from working on the older Duets, like the 0.6?

Could you restate what "this" is? Lots of things discussed above...

-

@Danal sorry, I was referring to the OP. Didn't realise how old it was.

"Requirements

DuetWifi or Duet Ethernet or Duet 2 Maestro controlled printer

RepRapFirmware v1.21 or v2.0 or higher"

-

Ah, got it. OP will need to answer.

I've been intentionally not "stepping on toes" because both @resam and I have tools that do lapse-to-video. Each has its strengths and weaknesses.

But I will mention mine... The one linked below "duetLapse" should be able to interact with any Firmware V2 or V3. It was developed to run on a Pi, but could potentially run anywhere that Python runs. It only needs to be able to open the camera, and to reach the printer via network.

-

I don't see any specific reason why it should not work on a Duet 0.6 - as long as it runs RRF 1.21 or later and has Telnet active.
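A quick way to check the Telnet part from the Pi, as a rough sketch: the helper below only tests whether a TCP connection to the board's Telnet port can be opened. The function name `telnet_reachable` is made up for illustration, and port 23 is assumed as the default; it says nothing about the firmware version.

```python
import socket


def telnet_reachable(host, port=23, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened.

    Hypothetical helper: port 23 is an assumed default for the Duet's
    Telnet service. This only proves the port accepts connections.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

Calling `telnet_reachable("192.168.1.50")` with your board's address (the IP here is a placeholder) returns `False` if Telnet is disabled or the board is not reachable on the network.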

-


Hi all,

Finally got a Raspberry Pi 4B, and have it set up, installed, and working with Duet RRF Timelapse. I've got the Pi taking pics of the print on layer change.

My question is regarding the layer change script. How should I add a line to the script to tell the print head to move to a specified place before each pic, without messing up the core programming?

Thanks in advance!!

-

@Damien I have modified my setup to sync with layer change. It requires no wiring or hacks other than adding a little custom g-code on layer change. I use a Bluetooth button to trigger the cell phone camera.

The custom g-code is in the printer profile tab of the slicer, so I made a layer sync specific printer profile. When I want to make layer synced video, I use that profile when I slice, otherwise I use a normal profile.

-

Hi everybody,

Is that solution able to do both: stream video to the Duet3D web front end, so I can check that the print is still going as expected, AND create a time lapse (time based or on layer change) in parallel during the same print?

Or is it only for making time lapse?

My plan is to get a Raspberry Pi (3, 4 or Zero) in combination with a Logitech HD920 cam.

-

@Hugo-Hiasl This mainly depends on the software you use to provide the stream. Some of these will allow you to have a continuous video stream that will just be paused for a second to take a snapshot image. I think both `mjpg-streamer` and `uStreamer` support this, but I have never tried it.

-

@wilriker Keepalive post.

In answer to your question: yes, `mjpeg-streamer` can be used this way, and it is how the `octolapse` plugin works in `octoprint`. I have a Vivedino Troodon (Voron clone) and its clone Duet2 Wifi card is so good it has the same issues with OctoPrint that the OEM board suffers.

-

@wilriker

I can't speak for `ustreamer`, but `mjpeg-streamer` provides an HTTP stream on `http://<ip:port>/?action=stream` and can accept multiple connections. I regularly have it streaming on my desktop and media PC simultaneously without issues or any noticeable load on the Pi.

OctoPrint's inbuilt timelapse system (or `octolapse`, if you love needless complexity) requests snapshots via `http://<ip:port>/?action=snapshot`; there is no detectable pause on the streams when this is invoked.

Conventionally: to get a timelapse you use a script to capture frames at set intervals/events, timestamp them if desired, and save them. Once the capture is complete you stitch them together using ffmpeg.
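For anyone who wants to try the capture/assemble route, here is a minimal sketch of what such a script could look like. It is not anybody's actual script: the function names, the file naming scheme and the libx264/mp4 output are my own assumptions, and port 8080 is just the common mjpg-streamer default. It only captures at fixed intervals; triggering on layer change would need something extra.

```python
import time
import urllib.request
from datetime import datetime
from pathlib import Path


def snapshot_url(host, port=8080):
    """Build the snapshot URL (port 8080 is an assumed default)."""
    return f"http://{host}:{port}/?action=snapshot"


def frame_path(out_dir, index, when):
    """Name frames so they sort in capture order and carry a timestamp."""
    return Path(out_dir) / f"frame_{index:06d}_{when:%Y%m%d-%H%M%S}.jpg"


def capture(host, out_dir, interval_s, count, port=8080):
    """Fetch `count` snapshots, one every `interval_s` seconds, and save them."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    saved = []
    for i in range(count):
        with urllib.request.urlopen(snapshot_url(host, port), timeout=10) as resp:
            data = resp.read()
        path = frame_path(out_dir, i, datetime.now())
        path.write_bytes(data)
        saved.append(path)
        if i < count - 1:
            time.sleep(interval_s)
    return saved


def ffmpeg_stitch_command(out_dir, fps, output):
    """Argument list for stitching the saved frames with ffmpeg.

    Uses the image2 glob pattern, which is not available in every
    ffmpeg build (notably some Windows builds).
    """
    return [
        "ffmpeg",
        "-framerate", str(fps),
        "-pattern_type", "glob",
        "-i", str(Path(out_dir) / "frame_*.jpg"),
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",
        output,
    ]
```

Something like `capture("localhost", "frames", 30, 120)` would grab 120 frames at 30 second intervals, and the list from `ffmpeg_stitch_command("frames", 20, "lapse.mp4")` can be passed to `subprocess.run` once the capture is done.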

However... when I want a timelapse on my CNC I start a screen session (nohup shell) on its attendant Pi and run this:

    pi@laserweb:~ $ ffmpeg -r 600 -i http://localhost:8080/?action=stream -vf "drawtext=fontcolor=white:fontsize=16:box=1:boxcolor=black@0.3:x=(w-text_w-10):y=(h-text_h-5):expansion=strftime::text='CNC \:\ %H\:%M\:%S'" -r 20 lapse.`date +%Y%m%d%H%M%S`.avi

This tricks `ffmpeg` into thinking that my 30fps stream is really a 600fps stream, and then asks it to timestamp that and convert to a 20fps framerate.

The end result is a 20x speedup video with timestamp, but the quality is noticeably lower than with the capture/assemble method, and the Pi 3 devotes 32 to 40% of its CPU to ffmpeg while it is running. Not recommended as a permanent solution; it's a classic quick'n'dirty one-liner.
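The arithmetic behind the 20x figure, as a small sketch (the function names are just for illustration): declaring a 30fps stream as 600fps compresses time by 600/30, and resampling to 20fps then keeps roughly one source frame per real second.

```python
def speedup(real_fps, declared_fps):
    """How much faster playback runs when the input rate is overstated."""
    return declared_fps / real_fps


def real_seconds_per_output_frame(real_fps, declared_fps, output_fps):
    """Real time covered by each frame of the final video."""
    return speedup(real_fps, declared_fps) / output_fps


def output_duration_s(real_duration_s, real_fps, declared_fps):
    """Length of the resulting video for a given real capture time."""
    return real_duration_s / speedup(real_fps, declared_fps)


# Numbers from the one-liner: 30fps stream, declared as 600fps, output at 20fps.
print(speedup(30, 600))                            # 20.0
print(real_seconds_per_output_frame(30, 600, 20))  # 1.0
print(output_duration_s(3600, 30, 600))            # 180.0
```

So an hour of machining comes out as a three minute video, with about one in every 30 source frames surviving the conversion to 20fps.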