Do we know what release will have variable support?

I've been getting into customizing various aspects of my printer, and in a few scenarios variables are pretty much mandatory.

Are we looking at 3.2, 3.3, something even further out?

@dc42 I don't know how you keep your cool; you must have the patience of a saint.

As a developer, I'd most likely end up in a physical altercation if I had to deal with some of the garbage people regularly throw your way.

@gloomyandy said in no keep alive support?:

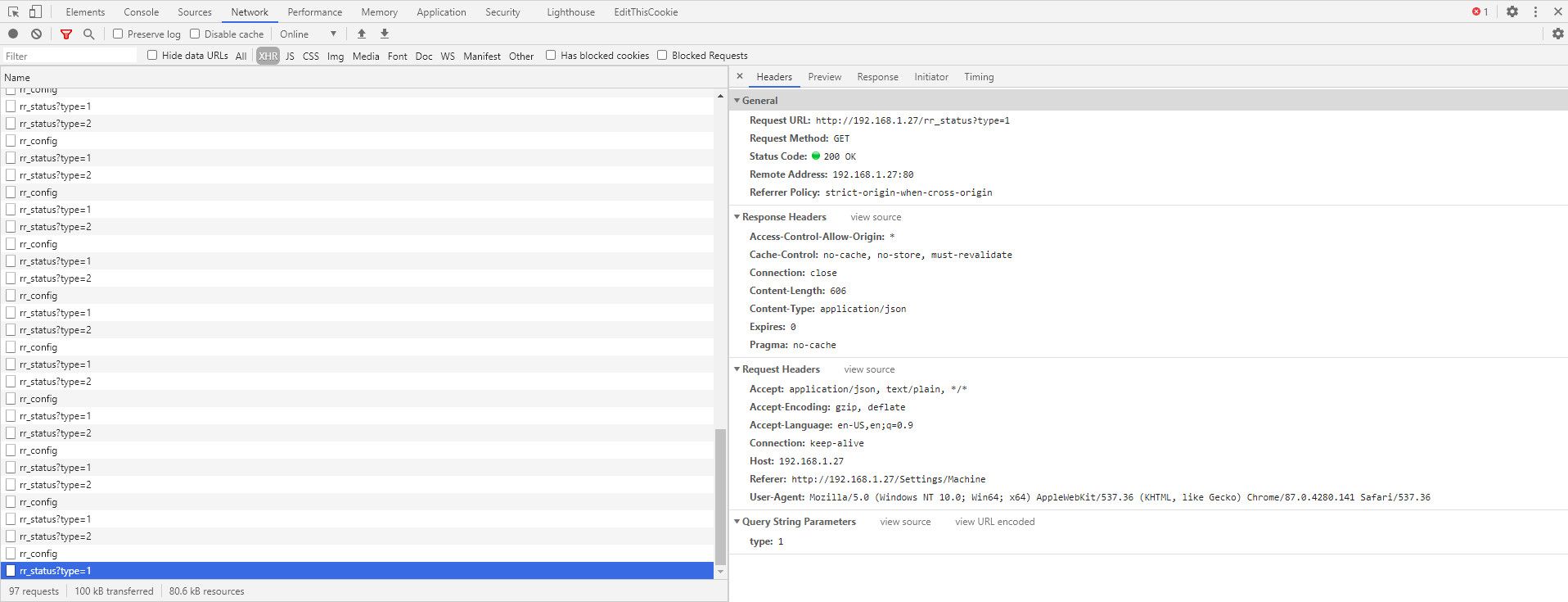

I'm not sure if DWC makes use of this feature but I suspect it may for some of the more frequent operations.

DWC/Chrome is asking for it: the request header is "Connection: keep-alive".

However, the server always responds with the response header "Connection: close".

I have yet to see it allow keep-alive.

(for more details see: https://github.com/Duet3D/RepRapFirmware/blob/dev/src/Networking/HttpResponder.cpp#L448).

If I read that properly, the code already has everything it needs to handle persistent connections, as it's inspecting the request headers in HttpResponder::SendJsonResponse.

See lines 986 through 999:

https://github.com/Duet3D/RepRapFirmware/blob/dev/src/Networking/HttpResponder.cpp#L986

However, when HttpResponder::SendJsonResponse calls HttpResponder::GetJsonResponse, the first thing it does is set keepOpen = false;

https://github.com/Duet3D/RepRapFirmware/blob/dev/src/Networking/HttpResponder.cpp#L450

So the if statement that starts on line 987 never gets run.

That's assuming I'm reading this properly; I haven't worked with C/C++ in almost 20 years.
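To make the flow described above easier to follow, here is a minimal Python model of it. This is only a sketch of my reading of the code, not the firmware itself: the function name `send_json_response` and the parameter `keep_open_after_build` are made up for illustration.

```python
def send_json_response(request_headers, keep_open_after_build):
    """Toy model of the control flow described above (not the real firmware).

    keep_open_after_build stands in for the value of the keepOpen flag
    once the response body has been built.
    """
    keep_open = keep_open_after_build
    if keep_open:
        # The request headers are only inspected when the flag is still set.
        connection = request_headers.get("Connection", "")
        keep_open = connection.lower() == "keep-alive"
    return "keep-alive" if keep_open else "close"


request = {"Connection": "keep-alive"}

# Flag cleared while building the response: the header check never runs.
print(send_json_response(request, keep_open_after_build=False))

# Flag left set: the client's request for a persistent connection is honoured.
print(send_json_response(request, keep_open_after_build=True))
```

In this model the first call answers "close" even though the client asked for keep-alive, which matches what I see in the browser.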

@deckingman said in Whats does the roadmap look like for variables?:

@pixelpieper This isn't a matter of which new features should take priority. This is a case of restoring basic functionality that already exists in version 2 firmware to version 3 firmware before adding new features.

I'm a developer by trade, and I think you need to consider a few things.

I've been working on this project off and on for a while, and I'm finally at a point where I can share it.

gLapse is a Python-based timelapse application designed to run on a Raspberry Pi fitted with an official camera module. It differs from most timelapse applications in that it doesn't generate a video as its output. Instead, it stores a sequence of still images that can be downloaded and used to generate a video using a 3rd party application.

Stills have a wider range of encoders and quality options available. They are only limited by the resolution of the sensor and the storage capacity of the SD card used. In short, stills allow for higher resolution and higher quality video, but require the additional step of post-processing in a 3rd party application.

Here is a sample 4k time lapse that was generated with stills captured with a Raspberry Pi Zero W equipped with an HQ camera module.

https://www.youtube.com/watch?v=bqkPgWrF3Eo
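As a rough illustration of the post-processing step, here is a small Python sketch that builds an ffmpeg command line for turning a folder of stills into a video. It only builds the command and does not run it. The folder name, the `.jpg` extension, the frame rate and the quality value are all assumptions for the example, not something gLapse dictates, and the `glob` pattern type may not be available in every ffmpeg build.

```python
import shlex


def build_ffmpeg_command(image_dir, output_file, fps=30, crf=18):
    """Build (but do not run) an ffmpeg command that encodes stills to video."""
    return [
        "ffmpeg",
        "-framerate", str(fps),      # how many stills make up one second of video
        "-pattern_type", "glob",     # select the input files with a wildcard pattern
        "-i", f"{image_dir}/*.jpg",  # assumed naming: JPEG stills that sort in capture order
        "-c:v", "libx264",           # H.264 software encoder
        "-crf", str(crf),            # quality setting, lower means higher quality
        "-pix_fmt", "yuv420p",       # pixel format most players can handle
        output_file,
    ]


command = build_ffmpeg_command("stills", "timelapse.mp4")
print(shlex.join(command))
```

Any other encoder or editing application would work just as well; the point is only that the encoding happens on a more capable machine than the Pi.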

If you are, you can increase your settings and run the test again. If you aren't, then you missed steps, so cut the current values down by whatever safety margin you want and you will have your maximums.

Make sure you run each test several times before moving on to higher values.

Here is an example I used for a CoreXY machine.

G21

G90

M82

; move to position slowly

M201 X250 Y250 Z100 E1500 ; Accelerations (mm/s^2)

M203 X6000 Y6000 Z900 E3600 ; Maximum speeds (mm/min)

M566 X200 Y200 Z100 E1500 ; Maximum jerk speeds mm/minute

G28 XY

G4 S2

G1 X280 Y280 F3000

G4 S2

M400

; rapid back fast

M201 X4500 Y4500 Z100 E1500 ;accel

M203 X24000 Y24000 Z900 E3600 ;speed

M566 X1500 Y1500 Z100 E1500 ;jerk

G1 X10 Y10 F13500

G4 S2

M400

; go home again slow

M201 X250 Y250 Z100 E1500 ; Accelerations (mm/s^2)

M203 X6000 Y6000 Z900 E3600 ; Maximum speeds (mm/min)

M566 X200 Y200 Z100 E1500 ; Maximum jerk speeds mm/minute

G1 X0 Y0 F3000

G4 S2

M400

M119
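The "cut the values down by a safety margin" step can be sketched in a few lines of Python. The numbers are the fast X/Y values from the script above; the 20% margin and the function names are just assumptions for the example, so pick whatever margin you are comfortable with.

```python
def apply_margin(value, margin_percent=20):
    """Reduce a tested limit by a safety margin, using integer arithmetic."""
    return value * (100 - margin_percent) // 100


def limit_commands(accel, speed, jerk, margin_percent=20):
    """Return M201/M203/M566 lines for X and Y with the margin applied."""
    a = apply_margin(accel, margin_percent)
    s = apply_margin(speed, margin_percent)
    j = apply_margin(jerk, margin_percent)
    return [
        f"M201 X{a} Y{a} ; Accelerations (mm/s^2)",
        f"M203 X{s} Y{s} ; Maximum speeds (mm/min)",
        f"M566 X{j} Y{j} ; Maximum jerk speeds mm/minute",
    ]


# Highest values that passed the test repeatedly (taken from the script above).
for line in limit_commands(accel=4500, speed=24000, jerk=1500):
    print(line)
```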

@deckingman said in Whats does the roadmap look like for variables?:

I'll bear that in mind but I suspect anyone who bought the latest generation of a product such as a 'phone would be somewhat miffed if many of the bundled apps (which worked on the previous version and are still bundled with the new version), didn't work.

The underlying hardware architecture for phones hasn't changed in a long time though. I think almost every phone on the market is based on ARM. If the next generation was x86 based, people would go mad because all kinds of things would break.

If people don't want to deal with issues, then they shouldn't be early adopters. Doesn't matter if it's phones, SBCs, TVs, cars etc.

STL and F3D files are available on my blog post about the case under reference.

@gloomyandy said in Simple way to set Acceleration and Jerk?:

Will the motor basically start at the jerk speed and then use the acceleration values to increase speed from that point to the target or is it more complex than that?

@dc42 might be able to give a better answer, but I would say the above is a good straightforward way of looking at it.

If using a CoreXY type design would it make sense to perform the tests using diagonal moves (which just use a single motor)?

IMO, yes, that's what I did in the script above for my CoreXY.

@CaLviNx said in Whats does the roadmap look like for variables?:

As a user I expect the "evolution" equipment (that I have paid for) to "fit for purpose" its not as if the end user is forcing the supplier into supply, but the supplier does use the "evolution" features to entice existing (and new) users into making a purchase.

No one is forcing you to buy it, and it's on you if you fall for a sales pitch, or can't control your consumerism!

I still think it would be really nice to have.

If it existed, it would already be in the start-up gcode of every print I do.

@jens55 said in Meta commands:

To skip an iteration ???? Where did you see this in the documentation ???

I look at 'continue' as a command that strictly exists as a means to improve readability of the code since the command itself seems to do diddly squat and can be left out.

To be clear, it doesn't skip an iteration; it skips everything after the continue statement in the current iteration. It goes back to the top of the looping structure and starts the next iteration. Every programming language I can think of works this way.
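Here is the same behaviour in Python, purely as an illustration of how continue works in a general-purpose language (it is not RRF meta command syntax):

```python
printed = []

for i in range(5):
    if i == 2:
        # Skip the rest of the loop body for this pass only,
        # then carry on with the next value of i.
        continue
    printed.append(i)

print(printed)  # [0, 1, 3, 4]
```

The loop still runs five times; only the code after the continue is skipped on the pass where i is 2.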

@alankilian said in Meta commands:

@jens55 said in Meta commands:

nested loops will be a nightmare.

Why?

Each loop gets its own iterator (which is what iterators ARE) so everything works out.

They are all called iterations.

If you want to use several of them to set a variable, the code would be very hard to read (assuming scoping even allows you to access all of them).

The following would be really confusing to follow if you replaced a, b, and c with iterations.

var x = 0
var a = 0
var b = 0
var c = 0
while var.a < 10
  set var.b = 0
  while var.b < 20
    set var.c = 0
    while var.c < 40
      set var.x = (var.a * var.b) - var.c
      set var.c = var.c + 1
    set var.b = var.b + 1
  set var.a = var.a + 1
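For comparison, this is roughly how the same nested structure reads in Python with a named counter per loop. It is only meant to show why named counters are easier to follow than a single implicit counter; the `passes` variable is added just to count how often the innermost body runs.

```python
x = 0
passes = 0

for a in range(10):
    for b in range(20):
        for c in range(40):
            # Each loop level has its own name, so it is obvious
            # which counter every term refers to.
            x = (a * b) - c
            passes += 1

print(x, passes)  # 132 8000
```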

It's just a guess, but since no commands are being run when the if statement fails, iterations might not get incremented (maybe a bug).

As a developer I'm not sure I like the concept of an iterations variable.

The documentation says this about it:

iterations - The number of completed iterations of the innermost loop

If you have nested looping structures it could get difficult to follow the code.

If I have all the syntax correct, something like this is more standard in the software development world.

var cnt = 0
while var.cnt < 10
  set var.cnt = var.cnt + 1
  M118 S"hello world"
  G4 S2

@stuartofmt said in gLapse: a time lapse application:

@kb58 said in gLapse: a time lapse application:

..... yeah I can see how a dedicated camera and interface would be better.....

These programs evolved to satisfy needs such as: monitoring and taking timelapse from, often, lengthy prints; not needing to be there to start taking the time-lapse; optionally parking the hotend for each image; capturing from more than one printer at a time; etc. etc.Not to mention way cheaper in absolute terms and not tying up your smartphone

I agree with all of this.

The only thing I'd add is that, IMO, going with a Pi and a Pi camera module gives you a lot more freedom than off-the-shelf webcams and phones. You can customize the hardware and software to your heart's content. For example, you could rig up a NoIR module and an IR light source and shoot in a lights-out environment. For stills you have a lot of output options (jpeg, png, gif, bmp, yuv, rgb, rgba, bgr, bgra). The amount of customization available is truly extensive.

This is my Pi Zero W and HQ camera module in a custom 3D-printed case of my own design. All I have to do is plug in an external Wi-Fi (the onboard one is not good) or Ethernet adapter and give it power.

I've never used DuetLapse3, so take all the following with a grain of salt.

DuetLapse3 supports Raspberry Pi camera modules and webcams; gLapse only supports Pi camera modules.

DuetLapse3 uses ffmpeg and libx264 on the Pi to generate the video (if you want it to). That's CPU-based encoding and thus would murder a lower-end Pi (like a Zero) if you tried to encode a 4K video. gLapse expects you to generate the video yourself on a more powerful machine. DuetLapse3 is simpler if you don't know anything about encoding video; gLapse ultimately gives you more flexibility, but you need to know more.

DuetLapse3 requires RRF V3+; gLapse will work with version 2 or 3 in standalone mode. I will eventually add support for v3 fronted with an SBC.

DuetLapse3 captures images based on time, layer change, or pause. gLapse just looks for specific M-codes to tell it what to do.

Those are probably the major differences.

Eventually I plan to write an app that combines gLapse and my streaming app, so that you can do everything from a single application.

@dc42 What about M82? By default my slicer is in absolute mode, and thus I have to have an M82 and a G92 E0 in my startup code.

Here is the first printing move from a sample file.

G1 F6000 X132.678 Y131.744 E0.0230

And here is the last one from the same file.

G1 X132.186 Y132.177 E784.9001
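To show what absolute extrusion means for those two moves, here is a small Python sketch that pulls the E values out of G-code lines and converts them to per-move amounts. The helper names are made up for the example, and it assumes the extruder position was zeroed with G92 E0 before the first move.

```python
import re

E_WORD = re.compile(r"\bE(-?\d+(?:\.\d+)?)")


def absolute_e_values(gcode_lines):
    """Return the E value of every line that has one, ignoring comments."""
    values = []
    for line in gcode_lines:
        code = line.split(";", 1)[0]
        match = E_WORD.search(code)
        if match:
            values.append(float(match.group(1)))
    return values


def to_relative(values, start=0.0):
    """Convert absolute E positions into the amount extruded by each move."""
    deltas = []
    previous = start
    for value in values:
        deltas.append(round(value - previous, 4))
        previous = value
    return deltas


moves = [
    "G1 F6000 X132.678 Y131.744 E0.0230",
    "G1 X132.186 Y132.177 E784.9001",
]
positions = absolute_e_values(moves)
print(positions)
print(to_relative(positions))
```

In absolute mode the E word is a running total, so the last value in the file is the total filament used since the G92 E0.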

I'm working on something that requires momentarily circumventing the print process, before resuming after the task is complete.

Does G60 store the extruder position in addition to the normal axis information?

I did some testing last night, and it didn't seem to be the case, but it might just be that I need to add additional retraction etc. to my scenario.